How much does it matter which hospital you go to? Of course, it matters a lot – hospitals vary enormously on quality of care, and choosing the right hospital can mean the difference between life and death. The problem is that it’s hard for most people to know how to choose. Useful data on patient outcomes remain hard to find, and even though Medicare provides data on patient mortality for select conditions on their Hospital Compare website, those mortality rates are calculated and reported in ways that make nearly every hospital look average.

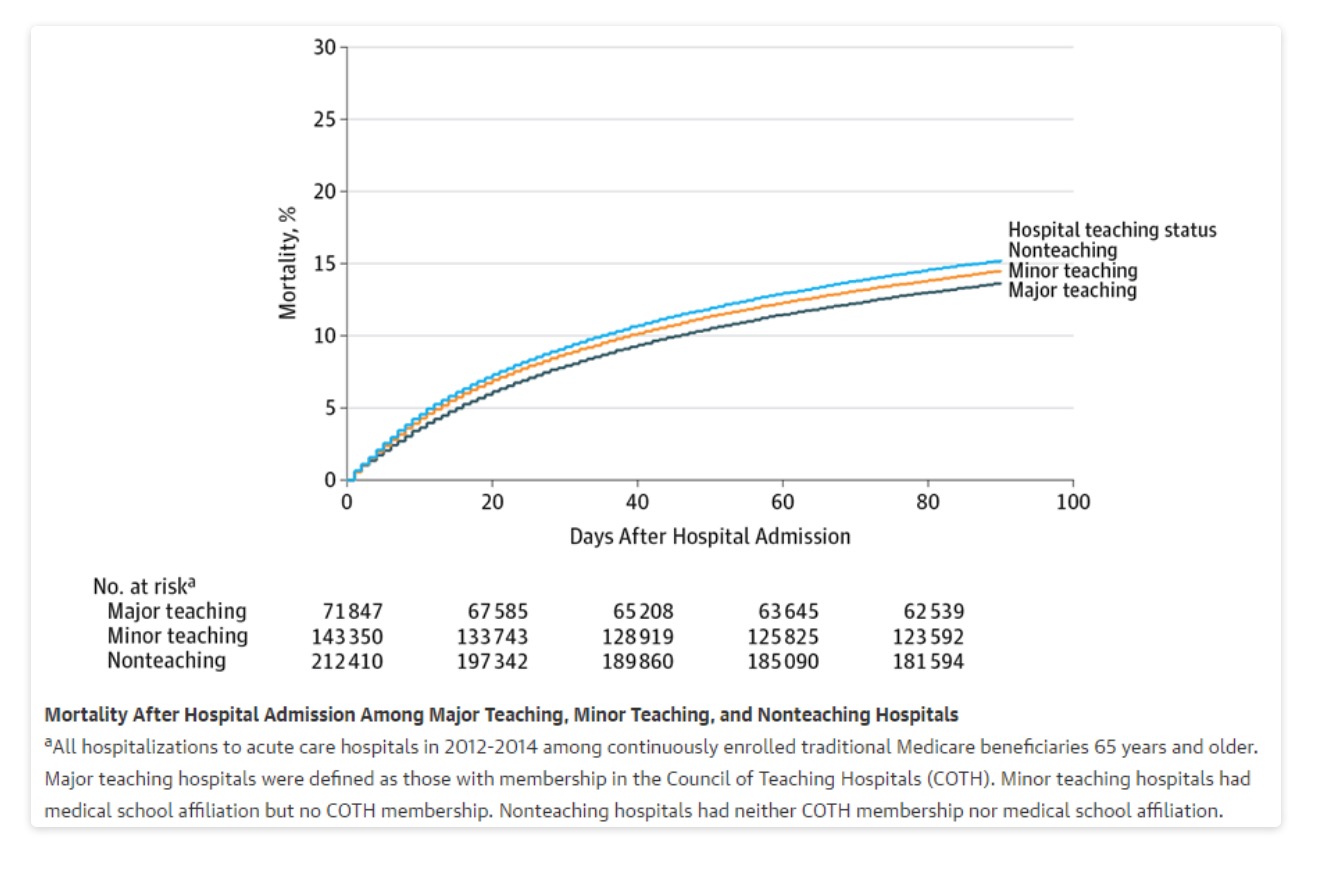

Some people choose to receive their care at teaching hospitals. Studies in the 1990s and early 2000s found that teaching hospitals performed better, but there was also evidence that they were more expensive. As “quality” metrics exploded, teaching hospitals often found themselves on the wrong end of the performance stick, with more hospital-acquired conditions and more readmissions. In nearly every national pay-for-performance scheme, they seemed to be doing worse than average, not better. In an era focused on high-value care, the narrative has increasingly become that teaching hospitals are not any better – just more expensive.

But is this true? On the one measure that matters most to patients when it comes to hospital care – whether you live or die – are teaching hospitals truly no better or possibly worse? About a year ago, that was the conversation I had with a brilliant junior colleague, Laura Burke. When we scoured the literature, we found that there had been no recent, broad-based examination of patient outcomes at teaching versus non-teaching hospitals. So we decided to take this on.

As we plotted how we might do this, we realized that to do it well, we would need funding. But who would fund a study examining outcomes at teaching versus non-teaching hospitals? We thought about NIH but knew that was not a realistic possibility – they are unlikely to fund such a study, and even if they did, it would take years to get the funding. There are also some excellent foundations, but they are small and therefore focus on specific areas. Next, we considered asking the Association of American Medical Colleges (AAMC). We know these colleagues well and knew they would be interested in the question. But we also knew that for some people – those who see the world through the “conflict of interest” lens – any finding funded by AAMC would be quickly dismissed, especially if we found that teaching hospitals were better.

Setting up the rules of the road

As we discussed funding with AAMC, we set up some basic rules of the road. Actually, Harvard requires these rules if we receive a grant from any agency. As with all our research, we would maintain complete editorial independence. We would decide on the analytic plan and make decisions about modeling, presentation, and writing of the manuscript. We offered to share our findings with AAMC (as we do with all funders), but we were clear that if we found that teaching hospitals were in fact no better (or worse), we would publish those results. AAMC took a leap of faith knowing that they might be funding a study that casts teaching hospitals in a bad light. The AAMC leadership told me that if teaching hospitals are not providing better care, they wanted to know – they wanted an independent assessment of their performance using meaningful metrics.

Our approach

Our approach was simple. We examined 30-day mortality (the most important measure of hospital quality) and extended our analysis to also examine 90-day mortality (to see if differences between teaching and non-teaching hospitals persisted over time). We built our main models, but in the back of my mind, I knew that no matter which choices we made, some people would question them as biased. Thus, we ran a lot of sensitivity analyses, looking at shorter-term outcomes (7 days), models with and without transferred patients, models within various hospital size categories, and models with various specifications of how one even defines teaching status. Finally, we included volume in our models to see if the volume of patients seen was driving differences in outcomes.

The one result that we found consistently across every model and using nearly every approach was that teaching hospitals were doing better. They had lower mortality rates overall, across medical and surgical conditions, and across nearly every single individual condition. And the findings held true all the way out to 90 days.

What our findings mean

This is the first broad, post-ACA study examining outcomes at teaching hospitals, and for the fans of teaching hospitals, this is good news. The mortality difference between teaching and non-teaching hospitals is clinically substantial: for every 67 to 84 patients who go to a major teaching hospital (as opposed to a non-teaching hospital), you save one life. That is a big effect.
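For readers who want to translate that "one life per 67 to 84 patients" figure into a mortality gap, here is a minimal sketch. It assumes the figure can be read as a conventional number needed to treat, so that the implied absolute difference in mortality is simply its reciprocal. The function name is hypothetical and chosen for illustration; this is back-of-the-envelope arithmetic, not the modeling used in the study.

```python
def absolute_risk_reduction(nnt: float) -> float:
    """Return the absolute risk difference implied by a number needed to treat.

    Assumption (not from the study's methods): the "patients per life saved"
    figure behaves like a standard NNT, so the risk difference is 1 / NNT.
    """
    if nnt <= 0:
        raise ValueError("NNT must be positive")
    return 1.0 / nnt


# The two ends of the range quoted in the text.
for nnt in (67, 84):
    arr = absolute_risk_reduction(nnt)
    print(f"NNT {nnt}: about {arr * 100:.2f} percentage points")
```

Read this way, the range corresponds to a mortality gap of somewhere between roughly 1.2 and 1.5 percentage points.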

Should patients only go to teaching hospitals though? That is wholly unrealistic, and these are only average effects. Many community hospitals are excellent and provide care that is as good as, if not superior to, that of teaching institutions. But lacking other information when deciding where to receive care, patients do better on average at teaching institutions.

Way forward

There are several lessons from our work that can help us move forward in a constructive way. First, given that most hospitals in the U.S. are non-teaching institutions, we need to think about how to help those hospitals improve. The follow-up work needs to delve into why teaching hospitals are doing better and how we can replicate and spread that to other hospitals. This strikes me as an important next step. Second, can we work on our transparency and public reporting programs so that hospital differences are distinguishable to patients? As I have written, we are doing transparency wrong, and one of the casualties is that it is hard for a community hospital that performs very well to stand out. Finally, we need to fix our pay-for-performance programs to emphasize what matters to patients. And for most patients, avoiding death remains near the top of the list.

Final thoughts on conflict of interest

For some people, these findings will not matter because the study was funded by “industry.” That is unfortunate. The easiest and laziest way to dismiss a study is to invoke conflict of interest. This is part of the broader trend of deciding what is real versus fake news, based on the messenger (as opposed to the message). And while conflicts of interest are real, they are also complicated. I often disagree with AAMC and have publicly battled with them. Despite that, they were bold enough to support this work, and while I will continue to disagree with them on some key policy issues, I am grateful that they took a chance on us. For those who can’t see past the funders, I would ask them to go one step further – point to the flaws in our work. Explain how one might have, untainted by funding, done the work differently. And most importantly – try to replicate the study. Because beyond the “COI,” we all want the truth on whether teaching hospitals have better outcomes or not. Ultimately, the truth does not care what motivated the study or who funded it.