Our Experience on Facebook Offers Important Insight Into Mark Zuckerberg’s Future Vision For Meaningful Groups

By ANDREA DOWNING

Seven years ago, I was utterly alone and seeking support as I navigated a scary health experience. I had a secret: I was struggling with the prospect of making life-changing decisions after testing positive for a BRCA mutation. I am a Previvor. This was an isolating and difficult experience, but it turned out that I wasn’t alone. I searched online for others like me, and was incredibly thankful that I found a caring community of women who could help me through the painful decisions that I faced.

As I found these women through a Closed Facebook Group, I began to understand that we had a shared identity. I began to find a voice, and understand how my own story fit into a bigger picture in health care and research. Over time, this incredible support group became an important part of my own healing process.

This group was founded by my friends Karen and Teri, and has a truly incredible story. With support from my friends in this group of other cancer previvors and survivors, I have found ways to face the decisions and fear that I needed to work through.

Our Support Group is a Lifeline. And We’re Not Alone.

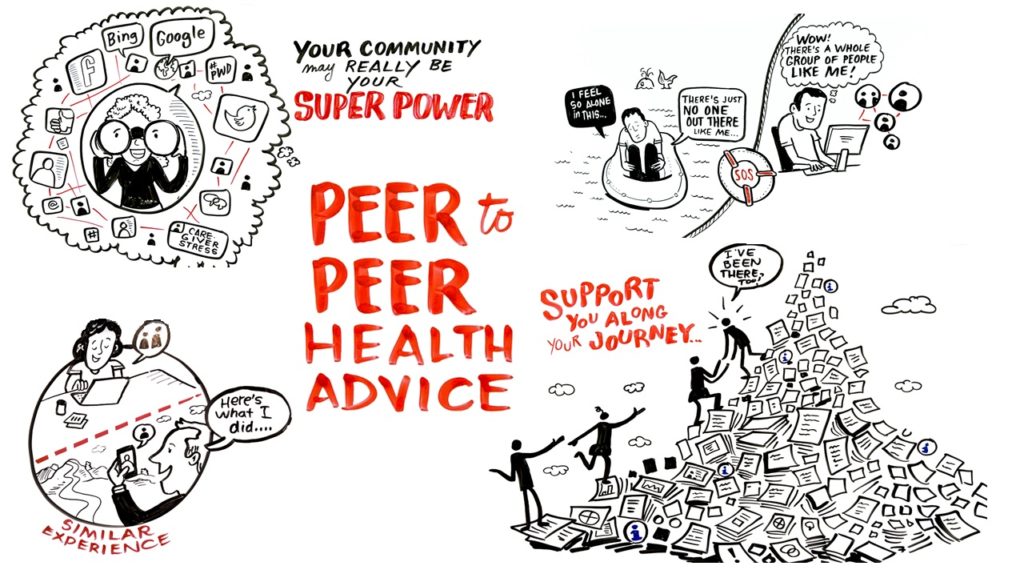

As a group of cancer previvors and survivors, we’re not alone. Millions of people go online every day to connect with others who share the same health challenges and to receive and provide information and support. Most of this happens on Facebook. This act of sharing stories and information with others who have the same health condition is called peer support. For many years there has been a growing body of evidence that peers seeking information from each other can and do improve the way they care for themselves and others. Today many of these peer support groups exist on Facebook.

Our Support Group Is Trapped. We Cannot Leave.

I know what anyone reading this might be thinking if you have experienced a peer support group. After all the terrible news about Facebook and privacy, why would ANYONE share sensitive or private health information on Facebook?!

The truth is: we really have no choice. We’re trapped. Many of these health communities formed back before we understood the deeper privacy problems inherent in digital platforms like Facebook. Our own group formed back in 2009 when Facebook was the “privacy aware” alternative to MySpace. We started out as a small collection that organically grew over time to become bigger and more organized. And because these groups grew so big, the network effect became very strong; patients must go where the network of their peers lives. This dilemma of the network effect is illustrated beautifully in an Op-Ed by Kathleen O’Brian, the mother of a child with autism who relies on her own peer support group and who wishes that she could jump ship but cannot leave.

People turn towards peer support groups when we fall through the medical cracks of the healthcare system. When facing the trauma of a new cancer diagnosis and/or genetic test results, the last thing on your mind is whether you should be reading the 30-page privacy policies that tech platforms require. Rather, patients need access to information. Patients need it fast. We need it from people who have been down the same path and who can speak from personal experience. And that information exists within these peer support groups on Facebook. When we are vulnerable, we need to be protected from those who can use information about our health against us.

Our awakening to deep cybersecurity problems.

My own experience with peer support groups took a terrifying turn last April. After the news of Cambridge Analytica broke in headlines, I asked myself a simple question: what are the privacy implications of having our cancer support group on Facebook?

As a geek with a professional background in tech, I thought it might be fun to do some research after looking at the technical details of what happened with Cambridge Analytica. As I looked at the developer tools on Facebook’s platform, I began to get concerned. Not long after this initial research, I was lucky enough to meet Fred Trotter, a leading expert in health data and cybersecurity. I shared this research with Fred. What followed next for me was a crash course in cybersecurity, threat modeling, coordinated disclosure, and learning about the laws that affected our group. Fred and I soon realized that we had found a dangerous security flaw that scaled to all closed groups on Facebook.

Since discovering these problems and navigating submission of this vulnerability to Facebook’s security team, our group has been desperately seeking a feasible path forward to find a safer space. We have awakened to the deeper issues that created breach after breach of data on Facebook. It seems like every day we hear about a new data breach and a new apology from Facebook.

Our trust is gone. But we’re still trapped.

The lasting impact of peer support group privacy breaches

When health data breaches occur, members of vulnerable support groups like ours are at risk of discrimination and harm. Women in our own support group can lose jobs and healthcare when health information generated on social media is used to make decisions about us without our knowledge or consent. For example, health insurers are buying information about my health — and potentially can use this to raise my rates or deny coverage. And 70% of employers are using social media to screen job candidates.

For me, these security problems raise questions about the lasting impact on our group when data is shared without our knowledge or consent. Without transparency and accountability from these tech companies on their data-sharing practices, how will we ever know what decisions are being made about us? If the data generated in the very support groups these patients need to navigate the trauma of a health condition is used against group members, who is being held accountable?

There is a stark contrast between Facebook’s rhetoric about “meaningful groups” and our current reality. We are trapped. Who is protecting these vulnerable groups? Who is being held accountable if and when the privacy and data generated by these groups are breached and used against their members? What are the solutions that give us the ability to trust again?

Does Our Support Group Have Any Rights?

Over this past year we have done a lot to try and understand what our rights are. Digital rights for groups like my own really do not exist. I have been reflecting on how, when someone is arrested, a police officer will read them their Miranda rights.

“You have the right to remain silent. Anything you say can and will be used against you.”

This is really our only right at the moment. These words keep repeating in my mind as I think about our group’s current predicament of what to say and not to say about health on social media. From the perspective of a cancer support group, it seems we’ve reached a point where anything we share on Facebook can be used against us… by third parties without our knowledge or consent. As we lose our trust, we stop engaging. We stop trusting that it is safe to share things with each other in our group. We become silent. Moreover, our group cannot simply pick up and leave. Where would we go? What happens to the 10 years of work and resources that we created on Facebook, which we would lose? How do we keep the same cycle from repeating on a new platform?

At the root of this problem are gaping holes in the consumer privacy rights that might protect our group. While the FTC has rules about health data breaches, there has been no enforcement to date. We are watching and waiting to see what the FTC might do. And while health information shared in hospitals, clinics, and doctors’ offices is protected by HIPAA, no such protection applies to the enormous amount of personal health information provided to social networks every day. The millions of people who convene through support groups are in a highly vulnerable position, and are currently powerless to change the dynamic to one in which they have protections and rights.

Congress and the FTC have held numerous hearings about a path forward to protect consumer data privacy, and a central theme for these dialogues is what to do about Facebook. There have been hearings upon hearings held by the FTC on consumer privacy in the 21st century. Recent hearings in Congress include those at the Senate Commerce Committee. While these hearings show a generalized desire to enact meaningful change, and some recognition of the urgency of the problem, I cannot help but notice the lack of representation in these dialogues from actual consumers who are affected by these privacy problems. I have held onto hope that there would be meaningful policy discussion about how to protect these vital peer support communities, but realize that we must help ourselves.

“We Take Your Group’s Privacy Very Seriously.”

Last year, we started a dialogue with Facebook’s teams after submitting our security vulnerability via the white hat portal. I heard over and over again from people at Facebook: “we take your privacy very seriously.” But Facebook never publicly acknowledged or fully fixed the security problems created within their group product. In fact, Facebook directly denied that there was ever a privacy and security problem for our groups.

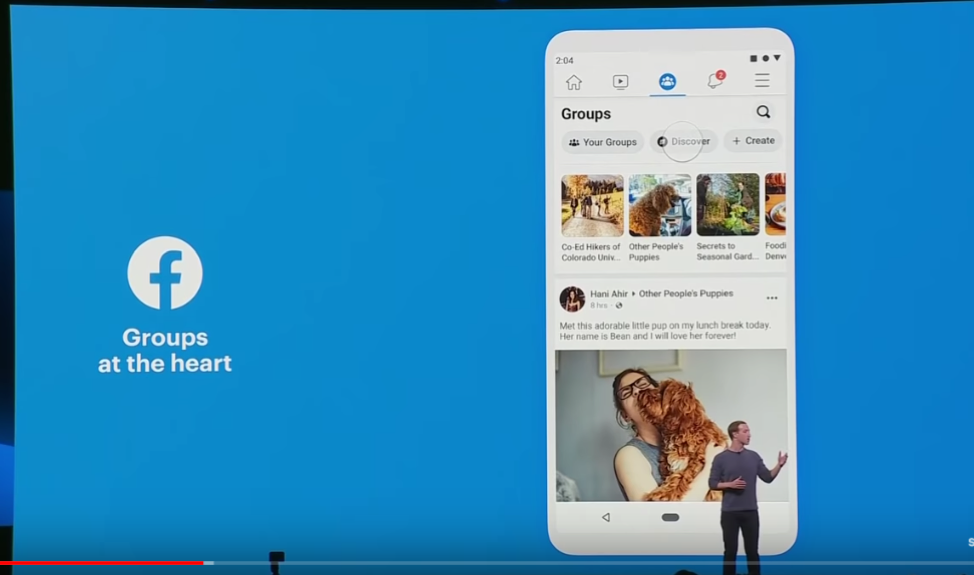

Given this experience, you can imagine my surprise this week when Mark Zuckerberg announced his big new plans for Facebook. After a heartwarming commercial of a twenty-something finding her people in meaningful groups, Zuck walks onto the stage and declares: “The future is private.”

Our support group had reasonably expected the present and past to be private too.

As I watched the F8 Summit, my heart sank. It seems we must all submit to this future that Facebook imagines for us. A future where problems and abuse in Silicon Valley are swept under the carpet. Where no one is accountable. A future where exploitation of our data lurks just underneath the surface of all the heart-warming rhetoric and beautiful design for meaningful groups. Currently Facebook Groups have one billion users per month. Our trapped group is just one example of so many that are at the heart of Facebook’s future as a company.

These groups go beyond health to others seeking support for a shared identity. Active duty military. Survivors who have lost a loved one. Moms needing support from other moms. Cybersecurity professionals. In extreme cases the information in vulnerable groups can be weaponized. For example there were groups for the Rohingya in Myanmar and groups to support sexual assault survivors that are now quiet or have been deleted.

It seems that the data that has made this company so wealthy is still a priority over our security and safety. I quietly watch the reactions to the latest Facebook event, and the lack of any responsibility to the people in groups like my cancer support group.

We Cannot Remain Silent

When I think about my support group of cancer previvors and survivors, I feel strong and brave. I fear retaliation writing this because we are truly vulnerable on the platform where we reside. Yet, we can’t remain silent. We don’t want any more empty promises from the technology platforms where we reside. We would rather not be appeased with shiny new features and rhetoric about privacy.

Rather, we seek autonomy. We seek a way to take our own power back as a group. We seek to protect our shared identity as a group and make decisions collectively. We seek to protect any data that is shared. There is something truly unique about the shared identity of our support group: we have always done things on our own terms. We are ten thousand women who have faced really hard realities about our future.

Facebook did not create our incredible groups. We did. We’ve worked hard for ten years cultivating this online group for a simple reason: we wanted our group to feel less afraid and alone than we felt in the beginning. Facebook does not have a monopoly on any vision for our future. The data generated within these groups is not an abstraction to us. It represents generations of suffering. Our own suffering. Our families’ suffering. We have an urgent need to develop a new way forward that protects our identity, and the future of our groups. We will create the future we choose for this community. That future exists with or without Facebook.

If you are in the same boat, please reach out to us here.

Andrea Downing. Previvor | Community Data Organizer | Accidental Security Researcher. This post originally appeared on Tincture here.